Source-to-Source Compilation based on Kernel Recognition

Background

Source-to-Source compilers serve as an assisting tool for most migrating and optimizing compilers. Compiler performance might vary depending on the input source code. Most compiler user's guides recommend a special form of the input code in order to boost the compiler's capabilities for optimizing for a given platform, and this normally differs from vendor to vendor. Additionally, to perform the recommended transformations is a time-consuming process.

A source-to-source compiler is a tool that transforms automatically the input code into a more suitable source for a given target compiler. One of the most challenging problems for this tool is to determine which transformations at the source level will result in a benefit for the compilation process ahead. The aim of this project is to propose a new methodology to handle this general problem.

Our Approach

We focus on a source-to-source compiler that specifically assist compilers in the field of embedded systems. This domain comprises a broader collection of architectures and requires higher code quality, in terms of performance, code size, etc. Since compiler support for the C programming language is available for most embedded processors, we assume as the tool's input: a c-source code.

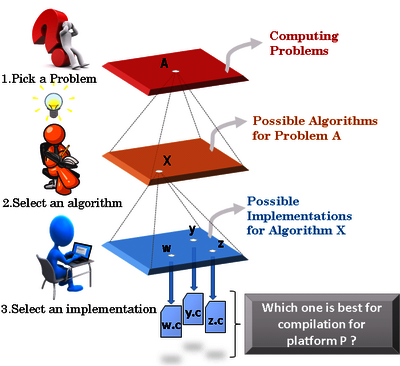

We believe that a source-to-source transformation is basically the search of a new implementation. Actually, the selection of an implementation is one of the steps that a programmer must execute in order to generate any source code. The following picture illustrates the programming process, usually manually performed:

Every step represents a search in a given space. From all the possible computing problems, the programmer has one to solve (A). From all the possibles solutions or algorithms that solves the problem, the programmer selects one to be implemented(X), and from all the possible implementations in c-code, the programmer selects one to be programmed (for example, w, most likely the programmer prefers a readable and understandable version, than a platform-customized version).

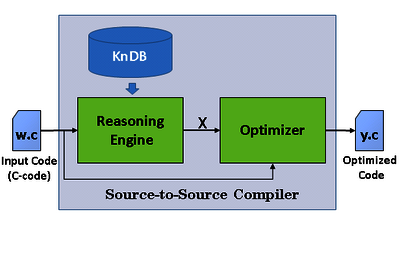

A source-to-source compiler is a tool that looks for a new implementation that suits better a given platform. Using this perspective, our approach tries to step back in the programming process by looking for information about the algorithm (X), and based on that new knowledge the tool reselects an implementation within the corresponding search space (z or y, for example). This tool concept is illustrated in the following picture:

The main idea behind this approach is to imitate what a human would do to re-implement a code. First to understand the code, then to transform it. To add some rationality to our tool, we supply it with two capabilities:

- Knowledge Representation: to store what it knows (in a knowledge database, KnDB).

- Automated Reasoning: to use the stored information to answer questions and to draw new conclusions (Reasoning Engine).

In this source-to-source compilation project, ICE develops an automatic tool that takes portable ANSI-C code as its input, and based on pre-configured additional knowledge, the tool performs a recognition process to identify pieces of code (or kernels) that represent a meaningful functionality within the algorithm of the code. In other words, it tries to "understand" at least partially the algorithm underneath the code. Then it uses this information to look for a new implementation of the input source code.

Project Goals

The goal of the "source-to-source compiler based on kernel recognition" research project is to find a methodology that can be used by our tool to detect within the input code (if present) any of the algorithmic kernels supplied in the knowledge data base for which an optimization is known to be beneficial.

Specific Objectives:

As a first step we work on a feasibility study, where our main objectives are:

- To evaluate possible intermediate representations that serve as knowledge representation, a promising one is the program expression graph (PEG).

- To analyse possible detection algorithms to enable the automated reasoning of our tool.

- To analyse possible tool flows, for which the coverage and the applicability can be measured.

Case Study

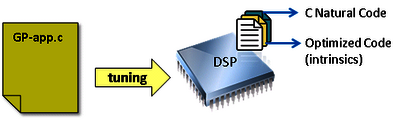

To work on our specific objectives a case study has been proposed. We analyse the case in which our tool is assisting an auto-tuning compiler, where a general-purpose application needs to be customized for a given platform, for example a Digital Signal Processor (DSP).

The vendor supplies a library of customized intrinsic functions, which contain platform-dependent optimizations. Beside these customized kernels, a description in c-code of each kernel functionality is also supplied (natural C kernel/ platform-independent kernel).

The current tool flow, is depicted in the following picture:

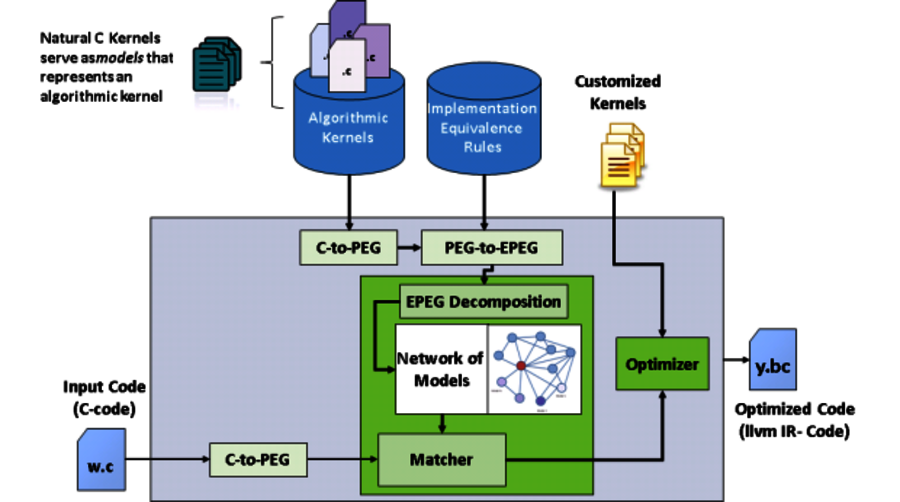

As illustrated, the input c-code is converted to a new representation: Program Expression graph (PEG), and a matcher identifies if any of the kernels is implemented within the input code. The key property of the PEG is that it allows to compactly represent multiple versions of the original code by introducing equality information in a new form of a graph called: EPEG.Therefore, every algorithmic kernel is extended with a set of equivalence axioms to obtain the corresponding EPEG, which represents many possible implementations of the algorithm kernel. This representation is then decomposed to build a network of models, which allows to identify from the input program if any of the kernels are present. This is detected regardless of the implementation details used by the programmer of the original input code, by means of a sub-graph isomorphism algorithm. Finally, for this use-case, the optimization consists of the utilization of the corresponding intrinsic function, if the kernel has been successfully identified.

In case of interest in working on this project or for more information regarding the current status, please feel free to contact us.

Contact

Publications